Existing work on prosthetic hands focuses on increasing dexterity for carrying out functional tasks.

Achieving specific hand movements, such as pointing with the index finger, is also desirable, but research on generating the hand movements themselves has yet to be widely explored.

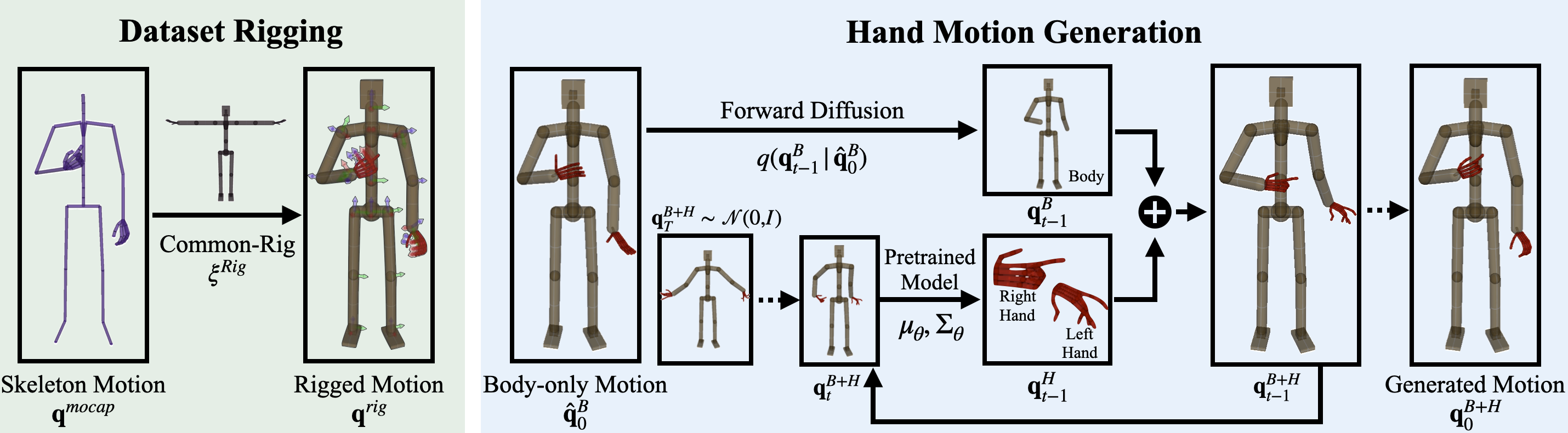

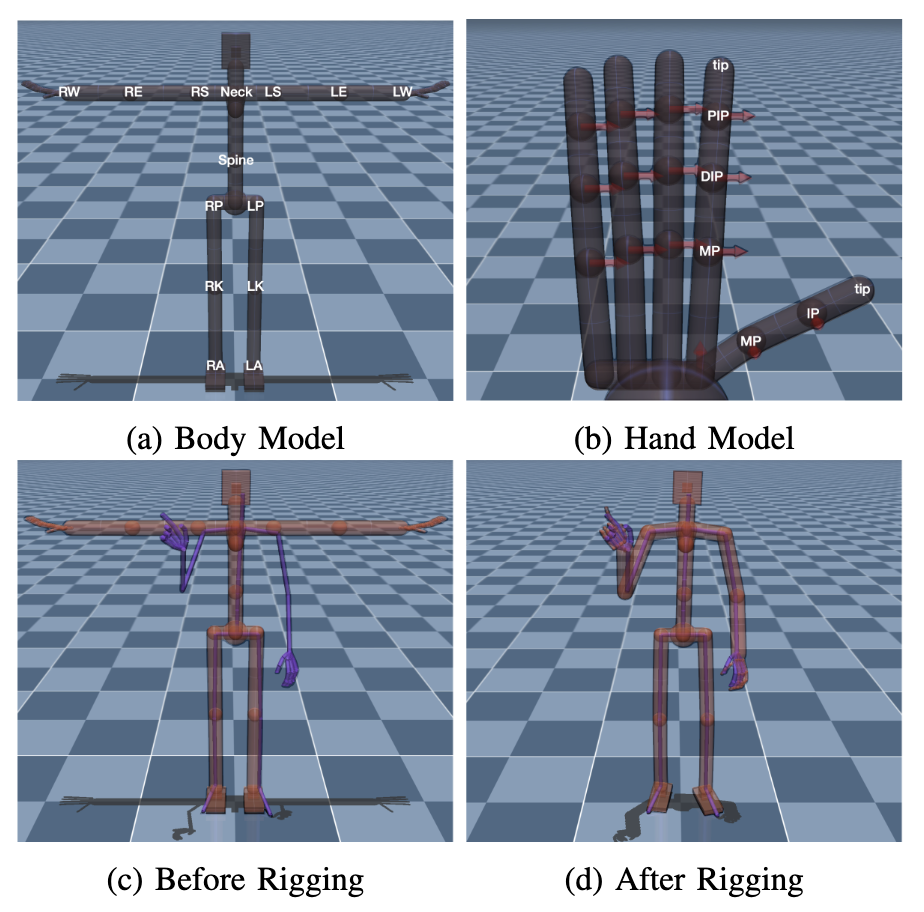

In this work, we propose a pipeline for generating hand motion from body motion using the Common-Rig, a kinematic rig representation that represents motion effectively, together with a diffusion-based inpainting method, an approach that has shown strong generalization and stability.

The Common-Rig is applied to a motion capture dataset containing both body and hand information, and hand motions are then generated conditioned on the body motions of a test set whose hand motions have been zeroed out.
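To illustrate what "generating hand motion conditioned on body motion" via diffusion inpainting can look like, the following is a minimal sketch assuming a standard DDPM with a linear noise schedule and replacement-based (RePaint-style, without resampling) inpainting. It is not the authors' implementation: the flattened `(frames, channels)` layout, the split into body and hand channels, the function names `make_schedule` and `inpaint`, and the denoiser `eps_model` are all hypothetical placeholders, and the Common-Rig format itself is not modeled here. At every reverse step, the known body channels are overwritten with the observed body motion noised to the matching level, so only the hand channels are actually generated.

```python
import numpy as np


def make_schedule(T=50, beta_start=1e-4, beta_end=0.02):
    """Linear DDPM noise schedule (an assumed choice, not taken from the paper)."""
    betas = np.linspace(beta_start, beta_end, T)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars


def inpaint(x_known, mask, eps_model, T=50, seed=0):
    """Replacement-based diffusion inpainting (hypothetical sketch).

    x_known:   (frames, channels) motion; body channels hold observed values.
    mask:      same shape; 1 where channels are known (body), 0 where generated (hands).
    eps_model: hypothetical denoiser, eps_model(x_t, t) -> predicted noise.
    """
    rng = np.random.default_rng(seed)
    betas, alphas, alpha_bars = make_schedule(T)
    x = rng.standard_normal(x_known.shape)  # start from pure noise
    for t in reversed(range(T)):
        eps = eps_model(x, t)
        # DDPM posterior mean of x_{t-1} given x_t and the predicted noise
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            var = betas[t] * (1.0 - alpha_bars[t - 1]) / (1.0 - alpha_bars[t])
            x_gen = mean + np.sqrt(var) * rng.standard_normal(x.shape)
            # Noise the known (body) channels forward to the same level t-1
            x_obs = (np.sqrt(alpha_bars[t - 1]) * x_known
                     + np.sqrt(1.0 - alpha_bars[t - 1]) * rng.standard_normal(x.shape))
        else:
            x_gen, x_obs = mean, x_known
        # Keep body channels from the observation, hand channels from the model
        x = mask * x_obs + (1.0 - mask) * x_gen
    return x


if __name__ == "__main__":
    frames, body_ch, hand_ch = 30, 12, 8  # hypothetical channel counts
    x_known = np.zeros((frames, body_ch + hand_ch))  # hand channels zeroed
    x_known[:, :body_ch] = np.random.default_rng(1).standard_normal((frames, body_ch))
    mask = np.zeros_like(x_known)
    mask[:, :body_ch] = 1.0
    # Placeholder denoiser; a trained network would go here
    out = inpaint(x_known, mask, lambda x, t: np.zeros_like(x))
    print(out.shape)
```

The replacement step is what makes a single unconditional motion model usable for conditional generation: the body channels are never sampled freely, so the generated hand channels must stay consistent with them as the noise level decreases.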

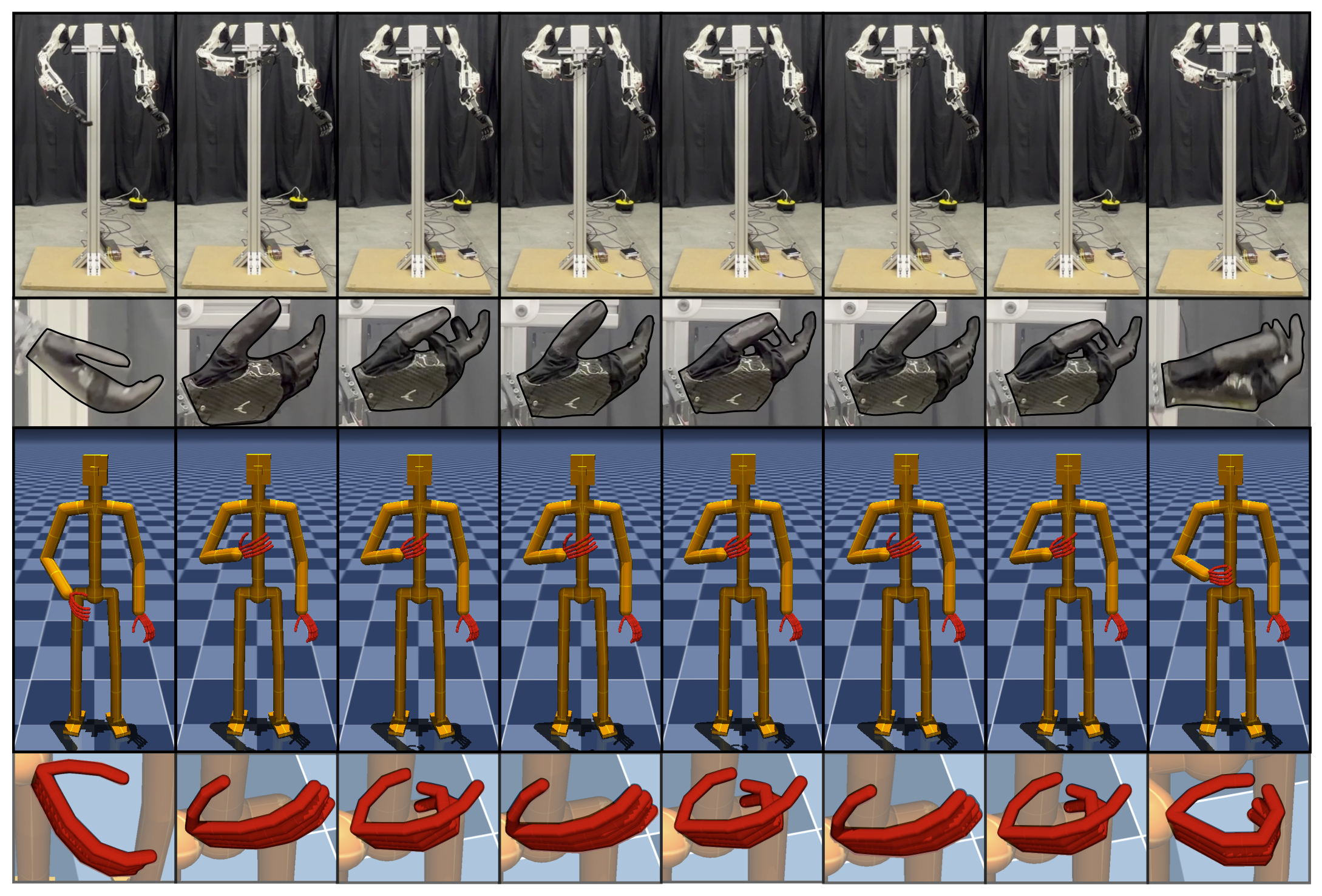

Compared with two baseline methods, the motions generated by our proposed method attain smaller fingertip positional errors and a diversity closer to that of the ground truth.
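The two evaluation quantities can be made concrete with a short sketch. The definitions below are assumptions based on common practice in motion generation, not necessarily the exact metrics used here: fingertip positional error is taken as the mean Euclidean distance between generated and ground-truth fingertip positions, and diversity as the average pairwise distance among a set of motions. Under these definitions, "diversity closer to the ground truth" means a smaller absolute gap between `diversity(generated_set)` and `diversity(ground_truth_set)`. The array shapes and function names are hypothetical.

```python
import numpy as np


def fingertip_error(pred, gt):
    """Mean Euclidean fingertip error (assumed definition).

    pred, gt: (frames, n_tips, 3) fingertip positions in the same units.
    """
    return float(np.linalg.norm(pred - gt, axis=-1).mean())


def diversity(samples):
    """Average pairwise distance between motions (assumed definition).

    samples: (n_samples, frames, n_tips, 3); each motion is flattened to a vector.
    """
    flat = samples.reshape(len(samples), -1)
    n = len(flat)
    dists = [np.linalg.norm(flat[i] - flat[j])
             for i in range(n) for j in range(i + 1, n)]
    return float(np.mean(dists))


def diversity_gap(generated, ground_truth):
    """Absolute difference in diversity; smaller means closer to the ground truth."""
    return abs(diversity(generated) - diversity(ground_truth))
```

Averaging over frames and fingertips keeps the error in the same units as the positions, which makes it easy to compare methods at a glance.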

In addition, the generated motions are executed on a real robotic system equipped with prosthetic hands for evaluation.